In this article, we'll configure worker node autoscaling for a Kubernetes cluster deployed with Container Service Extension (CSE) 4.0.3. This feature is especially useful alongside HPA (Horizontal Pod Autoscaling): when the existing workers run out of capacity for new pods, it deploys additional worker nodes to match the load generated by your applications.

Requirements

- A working Kubernetes cluster deployed with Container Service Extension

- Some knowledge of Kubernetes (the commands below use `k` as an alias for `kubectl`)

Test environment

```
k get node
NAME                                                   STATUS   ROLES                  AGE   VERSION
k8slorislombardi-control-plane-node-pool-njdvh         Ready    control-plane,master   21h   v1.21.11+vmware.1
k8slorislombardi-worker-node-pool-1-69f68cc6b9-kfhz9   Ready    <none>                 21h   v1.21.11+vmware.1
```

- 1 master (control-plane) node: 2 vCPU, 4 GB RAM

- 1 worker node: 2 vCPU, 4 GB RAM

HPA configuration

Metrics configuration

If you haven’t already done so, you need to deploy metrics-server on your Kubernetes cluster.

```shell
kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml
```

Next, edit the metrics-server deployment:

```shell
k edit deployments.apps metrics-server -n kube-system
```

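Add the `--kubelet-insecure-tls` option to the container arguments. It tells metrics-server not to verify the kubelets' serving certificates, which is acceptable in a lab but not recommended in production: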

```yaml
containers:
- args:
  - --cert-dir=/tmp
  - --secure-port=4443
  - --kubelet-preferred-address-types=ExternalIP,Hostname,InternalIP
  - --kubelet-use-node-status-port
  - --metric-resolution=15s
  - --kubelet-insecure-tls
  image: registry.k8s.io/metrics-server/metrics-server:latest
  imagePullPolicy: IfNotPresent
```
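If you prefer a non-interactive alternative to `k edit`, the same change can be expressed as a JSON Patch (RFC 6902) and applied with `kubectl patch --type=json`. The sketch below builds that patch; it assumes metrics-server is the first container (index 0) of the pod template, as in the upstream `components.yaml`, and `build_args_patch` is a hypothetical helper, not part of any Kubernetes tool.

```python
import json


def build_args_patch(extra_args, container_index=0):
    """Build a JSON Patch (RFC 6902) appending each flag to a container's args.

    Hypothetical helper. The trailing "-" in the path means "append to the end
    of the array", so the target container must already have an `args` list.
    Assumes the metrics-server container sits at `container_index` (0 by default).
    """
    path = f"/spec/template/spec/containers/{container_index}/args/-"
    return json.dumps([{"op": "add", "path": path, "value": arg} for arg in extra_args])


if __name__ == "__main__":
    # Pass the printed string to: kubectl patch ... --type=json -p '<patch>'
    print(build_args_patch(["--kubelet-insecure-tls"]))
```

The printed patch can then be applied with `k patch deployment metrics-server -n kube-system --type=json -p '[{"op": "add", "path": "/spec/template/spec/containers/0/args/-", "value": "--kubelet-insecure-tls"}]'`. Note that an `add` on `args/-` is not idempotent: applying it twice appends the flag twice.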

HPA test

Using the following manifest, we create a Deployment and associate it with an HPA policy.

```yaml
# hpa-test.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hpa-example
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hpa-example
  template:
    metadata:
      labels:
        app: hpa-example
    spec:
      containers:
      - name: hpa-example
        image: gcr.io/google_containers/hpa-example
        ports:
        - name: http-port
          containerPort: 80
        resources:
          requests:
            cpu: 200m
---
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: hpa-example-autoscaler
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: hpa-example
  minReplicas: 1
  maxReplicas: 10
  targetCPUUtilizationPercentage: 50
```
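`targetCPUUtilizationPercentage` is measured against the pods' CPU *requests* (here `200m`), not against the node's capacity: the HPA divides the total CPU usage of the Deployment's pods by the total of their requests. The sketch below reproduces that calculation; the function name and the usage figure are hypothetical.

```python
def cpu_utilization_percent(usages_millicores, request_millicores):
    """CPU utilization of a set of pods as a percentage of their requests.

    Hypothetical helper: total usage * 100 / total requests, truncated to an
    integer, assuming every pod has the same CPU request.
    """
    if not usages_millicores:
        raise ValueError("need at least one pod")
    total_usage = sum(usages_millicores)
    total_request = request_millicores * len(usages_millicores)
    return total_usage * 100 // total_request


# A single pod using a hypothetical 762m against its 200m request.
print(cpu_utilization_percent([762], 200))  # 381
```

This is why a pod can report several hundred percent: it is using several times the CPU it requested, which the node allows as long as spare CPU is available.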

```shell
k apply -f hpa-test.yaml
```

Check

```
k get deployments.apps
NAME          READY   UP-TO-DATE   AVAILABLE   AGE
hpa-example   1/1     1            1           3m21s

k get hpa
NAME                     REFERENCE                TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
hpa-example-autoscaler   Deployment/hpa-example   0%/50%    1         10        1          28m

k get pod
NAME                          READY   STATUS    RESTARTS   AGE
hpa-example-cb54bb958-cggfp   1/1     Running   0          3m26s
nginx-nfs-example             1/1     Running   0          12h
```

We then expose the deployment through a load-balancer VIP:

```shell
k expose deployment hpa-example --type=LoadBalancer --port=80
```

In our example, the load balancer assigns the IP address 172.31.7.210:

```
k get svc
NAME          TYPE           CLUSTER-IP       EXTERNAL-IP    PORT(S)        AGE
hpa-example   LoadBalancer   100.68.137.181   172.31.7.210   80:32258/TCP   14s
kubernetes    ClusterIP      100.64.0.1       <none>         443/TCP        20h
```

Load ramp-up

We now ramp up the load on our application from a temporary pod:

```yaml
# pod-wget.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-wget
spec:
  containers:
  - name: alpine
    image: alpine:latest
    command: ['sleep', 'infinity']
```

```
k apply -f pod-wget.yaml
k exec -it pod-wget -- sh
/ # while true; do wget -q -O- http://172.31.7.210; done
OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!
```

After a few minutes, you can see the HPA in action.

High CPU utilization on the pods:

```
k get hpa
NAME                     REFERENCE                TARGETS    MINPODS   MAXPODS   REPLICAS   AGE
hpa-example-autoscaler   Deployment/hpa-example   381%/50%   1         10        4          35m
```
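The replica count the HPA is aiming for follows the scaling formula from the Kubernetes documentation: `desiredReplicas = ceil(currentReplicas * currentUtilization / targetUtilization)`, skipped when the ratio is within a tolerance (0.1 by default) of 1.0, then clamped to the min/max bounds. The sketch below applies it to the figures in the output above (4 replicas, 381% for a 50% target, MAXPODS of 10). It is a simplification: the real controller also handles missing metrics and not-yet-ready pods, and rate-limits scale-up, which is why the count climbs in steps.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas, max_replicas, tolerance=0.1):
    """Simplified HPA scaling formula (ignores missing metrics and rate limits)."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the HPA's minReplicas/maxReplicas.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas at 381% for a 50% target: ceil(30.48) = 31, capped at 10.
print(desired_replicas(4, 381, 50, min_replicas=1, max_replicas=10))  # 10
```

Since each replica requests 200m, the HPA is asking for far more CPU than a single 2 vCPU worker can offer.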

The HPA tries to create new pods, but the worker node does not have enough CPU left to schedule them, so most of them stay Pending:

```
k get pod
NAME                          READY   STATUS    RESTARTS   AGE
hpa-example-cb54bb958-2sqpt   0/1     Pending   0          67s
hpa-example-cb54bb958-44k4x   0/1     Pending   0          52s
hpa-example-cb54bb958-6vd5l   0/1     Pending   0          52s
hpa-example-cb54bb958-82fb4   0/1     Pending   0          82s
hpa-example-cb54bb958-dpwwc   1/1     Running   0          40m
hpa-example-cb54bb958-ltb96   0/1     Pending   0          67s
hpa-example-cb54bb958-nsd54   0/1     Pending   0          67s
hpa-example-cb54bb958-w74fx   0/1     Pending   0          82s
hpa-example-cb54bb958-wz54z   1/1     Running   0          82s
hpa-example-cb54bb958-zrgw4   0/1     Pending   0          67s

k describe pod hpa-example-cb54bb958-2sqpt
Warning  FailedScheduling  11s (x3 over 82s)  default-scheduler  0/2 nodes are available: 1 Insufficient cpu, 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate.
```
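The "Insufficient cpu" message comes from the scheduler comparing pod *requests* (not live usage) with what is left of the node's allocatable CPU; the other node is the control plane, whose taint keeps these pods off it. A minimal sketch of that check, with hypothetical helper names and hypothetical node figures (the real values appear under "Allocatable" and "Allocated resources" in `k describe node`):

```python
from decimal import Decimal


def parse_cpu_millicores(quantity: str) -> int:
    """Convert a Kubernetes CPU quantity ("200m", "2", "1.5") to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)


def pods_that_fit(allocatable: str, already_requested: str, pod_request: str) -> int:
    """How many more pods requesting `pod_request` fit on a node.

    Hypothetical helper: only CPU requests are considered (no memory, taints or
    affinity). Pods that do not fit stay Pending with "Insufficient cpu".
    """
    free = parse_cpu_millicores(allocatable) - parse_cpu_millicores(already_requested)
    return max(0, free // parse_cpu_millicores(pod_request))


# Hypothetical figures for a 2 vCPU worker already running system pods.
print(pods_that_fit("1900m", "1450m", "200m"))  # 2
```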

Enable autoscaling

Cluster autoscaling is not enabled by default on Kubernetes clusters deployed by CSE, and at the time of writing VMware does not provide it natively. Here is a step-by-step method to configure it until it is added to the product roadmap.

Preparing the necessary components

Identify your cluster's admin namespace. In this example, the cluster is named k8slorislombardi:

```
k get namespaces
NAME                                STATUS   AGE
capi-kubeadm-bootstrap-system       Active   21h
capi-kubeadm-control-plane-system   Active   21h
capi-system                         Active   21h
capvcd-system                       Active   21h
cert-manager                        Active   21h
default                             Active   21h
hpa-test                            Active   93m
k8slorislombardi-ns                 Active   21h
kube-node-lease                     Active   21h
kube-public                         Active   21h
kube-system                         Active   21h
nfs-csi                             Active   15h
rdeprojector-system                 Active   21h
tanzu-package-repo-global           Active   21h
tkg-system                          Active   21h
tkg-system-public                   Active   21h
tkr-system                          Active   21h
```

The “admin” namespace for this cluster is therefore k8slorislombardi-ns.
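Here the admin namespace is simply the cluster name with an `-ns` suffix. Assuming your cluster follows the same `<cluster-name>-ns` pattern (an observation from this example, so check it against your own listing), the lookup can be sketched as a filter over the `k get namespaces` output; `find_admin_namespace` is a hypothetical helper.

```python
def find_admin_namespace(get_namespaces_output: str, cluster_name: str):
    """Return "<cluster_name>-ns" if it appears in `kubectl get namespaces` output.

    Hypothetical helper relying on the naming pattern seen in this example;
    returns None when no such namespace is listed.
    """
    expected = f"{cluster_name}-ns"
    for line in get_namespaces_output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if fields and fields[0] == expected:
            return expected
    return None


sample = """NAME STATUS AGE
default Active 21h
k8slorislombardi-ns Active 21h
kube-system Active 21h"""
print(find_admin_namespace(sample, "k8slorislombardi"))  # k8slorislombardi-ns
```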

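All the following steps must be performed in this admin namespace, k8slorislombardi-ns (for example, run `k config set-context --current --namespace=k8slorislombardi-ns` first).

- Create a PVC that will hold our kubeconfig, and a temporary pod that mounts it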

```yaml
# autoscale-config.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  annotations:
    volume.beta.kubernetes.io/storage-provisioner: named-disk.csi.cloud-director.vmware.com
  name: pvc-autoscaler
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Mi
  storageClassName: default-storage-class-1
  volumeMode: Filesystem
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-temporaire
spec:
  containers:
  - name: alpine
    image: alpine:latest
    command: ['sleep', 'infinity']
    volumeMounts:
    - name: pvc-autoscaler
      mountPath: /data
  volumes:
  - name: pvc-autoscaler
    persistentVolumeClaim:
      claimName: pvc-autoscaler
```

Apply and check:

```
k apply -f autoscale-config.yaml

k get pvc
NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS              AGE
pvc-autoscaler   Bound    pvc-775762d2-34e7-4854-823d-8f757d94437e   10Mi       RWO            default-storage-class-1   3m8s

k get pod
NAME             READY   STATUS    RESTARTS   AGE
pod-temporaire   1/1     Running   0          2m57s
```

- Copy the kubeconfig file

```
k exec -it pod-temporaire -- sh
/ # cd /data
/data # vi config
```

Paste the contents of your cluster's kubeconfig into this new file named `config` and save it.

You can then delete the temporary pod; the kubeconfig remains stored on the PVC:

```shell
k delete pod pod-temporaire
```

- Configure the hardware resources of the worker node pool

```
k get machinedeployments.cluster.x-k8s.io
NAME                                  CLUSTER            REPLICAS   READY   UPDATED   UNAVAILABLE   PHASE     AGE   VERSION
k8slorislombardi-worker-node-pool-1   k8slorislombardi   1          1       1         0             Running   23h   v1.21.11+vmware.1

k edit machinedeployments.cluster.x-k8s.io k8slorislombardi-worker-node-pool-1
```

We add annotations to the MachineDeployment object (`machinedeployments.cluster.x-k8s.io`) to define:

- The minimum and maximum number of worker nodes in the pool

- The hardware resources of the worker nodes to be deployed

```yaml
apiVersion: cluster.x-k8s.io/v1beta1
kind: MachineDeployment
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"cluster.x-k8s.io/v1beta1","kind":"MachineDeployment","metadata":{"annotations":{},"creationTimestamp":null,"name":"k8slorislombardi-worker-node-pool-1","namespace":"k8slorislombardi-ns"},"spec":{"clusterName":"k8slorislombardi","replicas":1,"selector":{},"template":{"metadata":{},"spec":{"bootstrap":{"configRef":{"apiVersion":"bootstrap.cluster.x-k8s.io/v1beta1","kind":"KubeadmConfigTemplate","name":"k8slorislombardi-worker-node-pool-1","namespace":"k8slorislombardi-ns"}},"clusterName":"k8slorislombardi","infrastructureRef":{"apiVersion":"infrastructure.cluster.x-k8s.io/v1beta1","kind":"VCDMachineTemplate","name":"k8slorislombardi-worker-node-pool-1","namespace":"k8slorislombardi-ns"},"version":"v1.21.11+vmware.1"}}},"status":{"availableReplicas":0,"readyReplicas":0,"replicas":0,"unavailableReplicas":0,"updatedReplicas":0}}
    cluster.x-k8s.io/cluster-api-autoscaler-node-group-max-size: "5"
    cluster.x-k8s.io/cluster-api-autoscaler-node-group-min-size: "1"
    capacity.cluster-autoscaler.kubernetes.io/memory: "4G"
    capacity.cluster-autoscaler.kubernetes.io/cpu: "2"
    capacity.cluster-autoscaler.kubernetes.io/ephemeral-disk: "20Gi"
    capacity.cluster-autoscaler.kubernetes.io/maxPods: "200"
    machinedeployment.clusters.x-k8s.io/revision: "1"
```
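The `cluster-api-autoscaler-node-group-min-size` and `-max-size` annotations bound the number of workers the autoscaler may set on this MachineDeployment. Annotation values are always strings, which is why the numbers are quoted. The sketch below reads the two bounds and clamps a requested worker count into them; it illustrates the bounds only, is not the autoscaler's own code, and the helper names are hypothetical.

```python
MIN_KEY = "cluster.x-k8s.io/cluster-api-autoscaler-node-group-min-size"
MAX_KEY = "cluster.x-k8s.io/cluster-api-autoscaler-node-group-max-size"


def node_group_bounds(annotations: dict) -> tuple:
    """Read (min, max) worker counts from MachineDeployment annotations (string values)."""
    lo, hi = int(annotations[MIN_KEY]), int(annotations[MAX_KEY])
    if lo < 0 or lo > hi:
        raise ValueError(f"invalid bounds: min={lo}, max={hi}")
    return lo, hi


def clamp_replicas(requested: int, annotations: dict) -> int:
    """Hypothetical helper: keep a requested worker count within the node group bounds."""
    lo, hi = node_group_bounds(annotations)
    return max(lo, min(hi, requested))


annotations = {MIN_KEY: "1", MAX_KEY: "5"}  # values from the MachineDeployment above
print(clamp_replicas(7, annotations))  # 5
```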

Deploying the autoscaler

The following manifest deploys the Cluster Autoscaler and mounts the previously created PVC so that the autoscaler can read the kubeconfig.

Be sure to replace k8slorislombardi and k8slorislombardi-ns with your own cluster name and admin namespace.

```yaml
# autoscale.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cluster-autoscaler
  namespace: k8slorislombardi-ns
  labels:
    app: cluster-autoscaler
spec:
  selector:
    matchLabels:
      app: cluster-autoscaler
  replicas: 1
  template:
    metadata:
      labels:
        app: cluster-autoscaler
    spec:
      containers:
      - image: us.gcr.io/k8s-artifacts-prod/autoscaling/cluster-autoscaler:v1.20.0
        name: cluster-autoscaler
        command:
        - /cluster-autoscaler
        args:
        - --cloud-provider=clusterapi
        - --kubeconfig=/data/config
        - --cloud-config=/data/config
        - --node-group-auto-discovery=clusterapi:clusterName=k8slorislombardi
        - --namespace=k8slorislombardi-ns
        - --node-group-auto-discovery=clusterapi:namespace=default
        volumeMounts:
        - name: pvc-autoscaler
          mountPath: /data
      volumes:
      - name: pvc-autoscaler
        persistentVolumeClaim:
          claimName: pvc-autoscaler
      serviceAccountName: cluster-autoscaler
      terminationGracePeriodSeconds: 10
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cluster-autoscaler-workload
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-autoscaler-workload
subjects:
- kind: ServiceAccount
  name: cluster-autoscaler
  namespace: k8slorislombardi-ns
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cluster-autoscaler-management
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-autoscaler-management
subjects:
- kind: ServiceAccount
  name: cluster-autoscaler
  namespace: k8slorislombardi-ns
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cluster-autoscaler
  namespace: k8slorislombardi-ns
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cluster-autoscaler-workload
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  - persistentvolumeclaims
  - persistentvolumes
  - pods
  - replicationcontrollers
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - update
  - watch
- apiGroups:
  - ""
  resources:
  - pods/eviction
  verbs:
  - create
- apiGroups:
  - policy
  resources:
  - poddisruptionbudgets
  verbs:
  - list
  - watch
- apiGroups:
  - storage.k8s.io
  resources:
  - csinodes
  - storageclasses
  - csidrivers
  - csistoragecapacities
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - batch
  resources:
  - jobs
  verbs:
  - list
  - watch
- apiGroups:
  - apps
  resources:
  - daemonsets
  - replicasets
  - statefulsets
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - create
  - delete
  - get
  - update
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - create
  - get
  - update
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cluster-autoscaler-management
rules:
- apiGroups:
  - cluster.x-k8s.io
  resources:
  - machinedeployments
  - machinedeployments/scale
  - machines
  - machinesets
  verbs:
  - get
  - list
  - update
  - watch
```
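The `--node-group-auto-discovery` values in the Deployment above use a `clusterapi:key=value[,key=value...]` spec; according to the Cluster API provider's README, documented keys include `namespace` and `clusterName`. The hypothetical parser below only shows how such a spec breaks down into filters; it is not the autoscaler's own parsing code.

```python
def parse_discovery_spec(spec: str) -> dict:
    """Split a "clusterapi:k=v,k=v" auto-discovery spec into a dict of filters.

    Hypothetical helper for illustration; raises ValueError on malformed input.
    """
    provider, sep, rest = spec.partition(":")
    if provider != "clusterapi" or not sep:
        raise ValueError(f"not a clusterapi discovery spec: {spec!r}")
    filters = {}
    for pair in filter(None, rest.split(",")):  # ignore empty segments
        key, eq, value = pair.partition("=")
        if not eq:
            raise ValueError(f"malformed key=value pair: {pair!r}")
        filters[key] = value
    return filters


# The two specs passed to the cluster-autoscaler container above.
print(parse_discovery_spec("clusterapi:clusterName=k8slorislombardi"))  # {'clusterName': 'k8slorislombardi'}
print(parse_discovery_spec("clusterapi:namespace=default"))  # {'namespace': 'default'}
```

Both keys can also be combined in a single spec, for example `clusterapi:namespace=k8slorislombardi-ns,clusterName=k8slorislombardi`, which should restrict discovery to MachineDeployments matching both filters.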

Check

```
k apply -f autoscale.yaml
deployment.apps/cluster-autoscaler created
clusterrolebinding.rbac.authorization.k8s.io/cluster-autoscaler-workload created
clusterrolebinding.rbac.authorization.k8s.io/cluster-autoscaler-management created
serviceaccount/cluster-autoscaler created
clusterrole.rbac.authorization.k8s.io/cluster-autoscaler-workload created
clusterrole.rbac.authorization.k8s.io/cluster-autoscaler-management created

k get pod
NAME                                  READY   STATUS    RESTARTS   AGE
cluster-autoscaler-79c5cb9df6-gqlfd   1/1     Running   0          29s
```

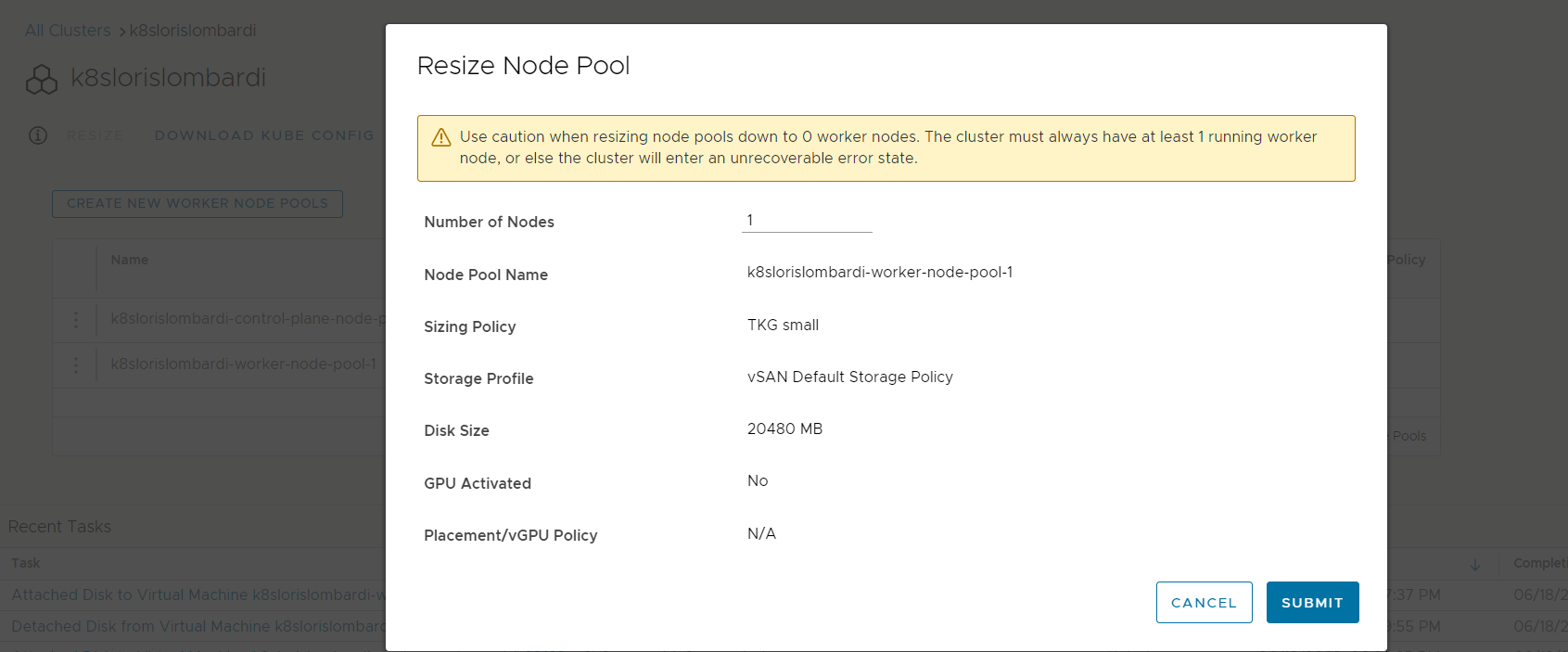

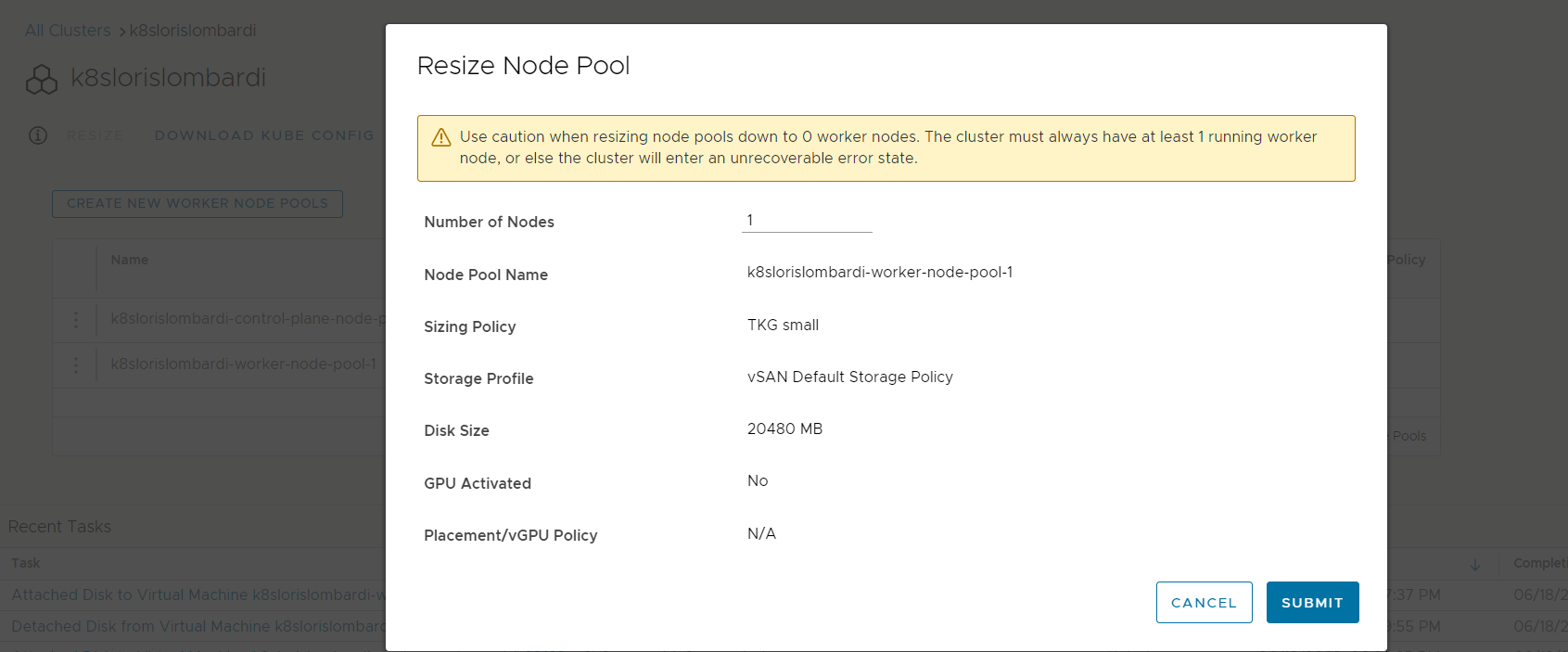

rdeprojector configuration

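By default, Cloud Director continuously checks the configuration of your Kubernetes cluster through the rdeprojector controller, which is also what lets you add or remove workers from the Cloud Director GUI. Because it reconciles the cluster against the configuration known to Cloud Director, it can override worker-count changes made by the autoscaler, so we disable it: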

```shell
k edit deployments.apps rdeprojector-controller-manager -n rdeprojector-system
```

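Scale the number of replicas down to 0 (`k scale deployment rdeprojector-controller-manager -n rdeprojector-system --replicas=0` achieves the same result without opening an editor):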

```yaml
spec:
  progressDeadlineSeconds: 600
  replicas: 0
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      control-plane: controller-manager
```

Test Autoscaling

```
k apply -f pod-wget.yaml
k exec -it pod-wget -- sh
/ # while true; do wget -q -O- http://172.31.7.210; done
OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!
```

As before, a few minutes later we see high CPU utilization on the pods and on the worker node:

```
k get hpa -n default
NAME                     REFERENCE                TARGETS    MINPODS   MAXPODS   REPLICAS   AGE
hpa-example-autoscaler   Deployment/hpa-example   122%/50%   1         10        8          3h17m
```

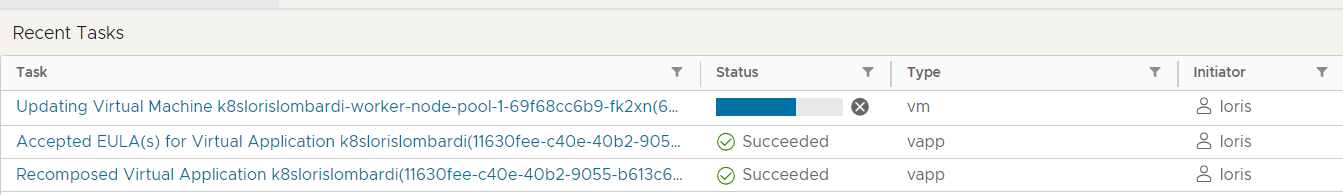

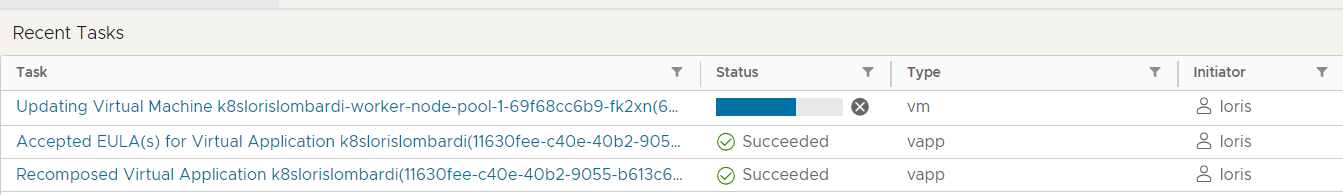

The autoscaler kicks in and deploys a new worker node:

```
k get machinedeployments.cluster.x-k8s.io
NAME                                  CLUSTER            REPLICAS   READY   UPDATED   UNAVAILABLE   PHASE       AGE   VERSION
k8slorislombardi-worker-node-pool-1   k8slorislombardi   2          1       2         1             ScalingUp   24h   v1.21.11+vmware.1
```
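The autoscaler adds workers when pods stay Pending because they do not fit on any existing node. Its real algorithm simulates scheduling the pending pods onto a template node; the much simpler estimate below assumes every new worker offers the same free CPU and every pending pod has the same request, and caps the result at the node group's max size. The helper name and the figures in the example are hypothetical.

```python
import math


def nodes_to_add(pending_pods: int, pod_request_m: int, free_per_new_node_m: int,
                 current_nodes: int, max_nodes: int) -> int:
    """Rough number of workers to add so all pending pods fit (CPU only, hypothetical)."""
    pods_per_node = free_per_new_node_m // pod_request_m
    if pods_per_node == 0:
        return 0  # the pod would not fit on a new node of this size either
    needed = math.ceil(pending_pods / pods_per_node)
    # Never exceed the node group's max-size annotation.
    return max(0, min(needed, max_nodes - current_nodes))


# Hypothetical: 6 pending pods of 200m, ~1500m free on a fresh worker, 1 worker now, max 5.
print(nodes_to_add(6, 200, 1500, current_nodes=1, max_nodes=5))  # 1
```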

The new worker node joins the cluster automatically:

```
k get node
NAME                                                   STATUS     ROLES                  AGE   VERSION
k8slorislombardi-control-plane-node-pool-njdvh         Ready      control-plane,master   24h   v1.21.11+vmware.1
k8slorislombardi-worker-node-pool-1-69f68cc6b9-fk2xn   NotReady   <none>                 3s    v1.21.11+vmware.1
k8slorislombardi-worker-node-pool-1-69f68cc6b9-kfhz9   Ready      <none>                 24h   v1.21.11+vmware.1
```

The new worker is Ready:

```
k get node
NAME                                                   STATUS   ROLES                  AGE   VERSION
k8slorislombardi-control-plane-node-pool-njdvh         Ready    control-plane,master   24h   v1.21.11+vmware.1
k8slorislombardi-worker-node-pool-1-69f68cc6b9-fk2xn   Ready    <none>                 42s   v1.21.11+vmware.1
k8slorislombardi-worker-node-pool-1-69f68cc6b9-kfhz9   Ready    <none>                 24h   v1.21.11+vmware.1
```

The additional pods are now deployed:

```
k get deployments.apps
NAME          READY   UP-TO-DATE   AVAILABLE   AGE
hpa-example   10/10   10           10          3h29m
```

Source: [cluster-autoscaler](https://github.com/kubernetes/autoscaler/tree/master/cluster-autoscaler)