Since NSX-T 3.2, installing NSX Application Platform requires a Kubernetes cluster. NSX Application Platform is itself required to use NSX Intelligence and other security components.

In this article, we’ll look at how to install NSX Application Platform in a Kubernetes environment deployed by Container Service Extension.

Requirements:

- Functional NSX environment

- Functional CSE environment

- Some knowledge of Kubernetes

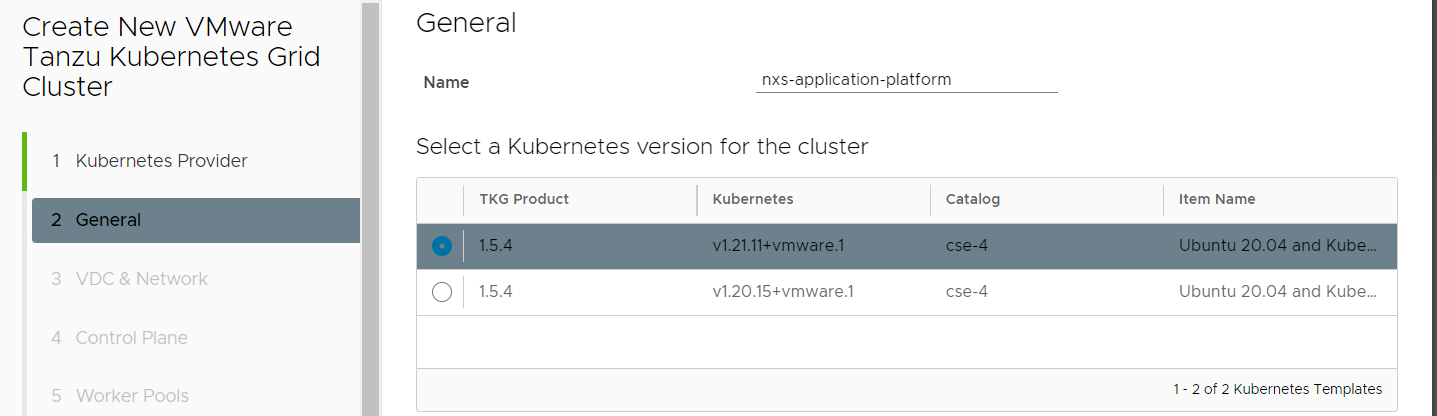

Deploying the Kubernetes cluster

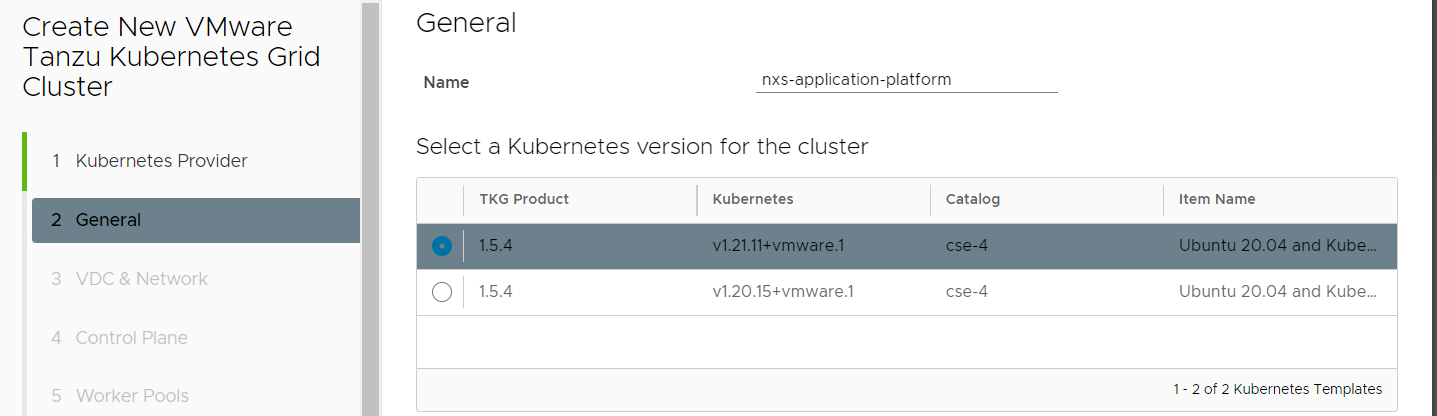

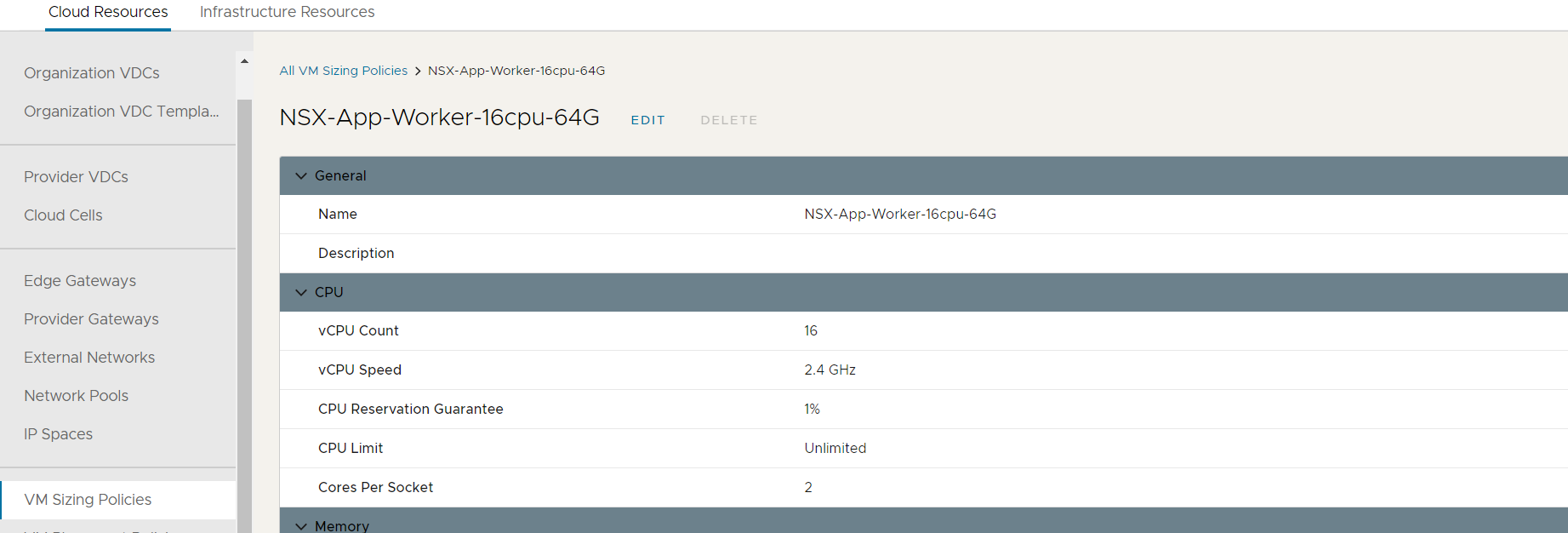

We are going to deploy NSX Application Platform in evaluation mode. According to the VMware documentation, you must deploy:

- Only one Master node

- Only one Worker node
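Once the cluster is up, the node count can be checked from the command line. The helper below is a minimal sketch, not part of the VMware procedure: `count_node_roles` is a hypothetical name, and it assumes the control plane node shows `control-plane` or `master` in the ROLES column of `kubectl get nodes`, which may differ on your cluster.

```shell
#!/usr/bin/env bash
# Summarise node roles from `kubectl get nodes --no-headers` output on stdin.
# Expected columns: NAME STATUS ROLES AGE VERSION
count_node_roles() {
  awk '
    $3 ~ /control-plane|master/ { cp++; next }  # control plane (Master) nodes
    NF >= 3                     { wk++ }        # any other node counts as a Worker
    END { printf "control-plane=%d worker=%d\n", cp, wk }
  '
}

# Usage (requires kubectl access to the cluster):
#   kubectl get nodes --no-headers | count_node_roles
```

For an evaluation deployment, the expected result is `control-plane=1 worker=1`.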

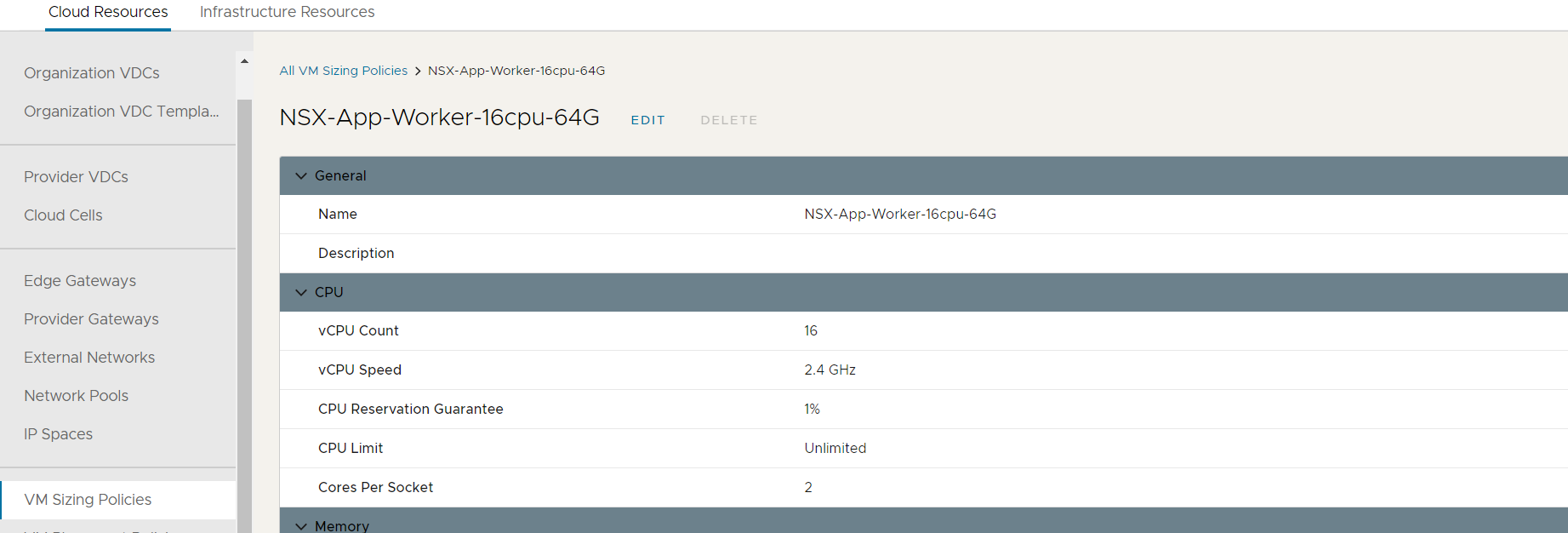

First, create a new VM sizing policy in your Cloud Director.

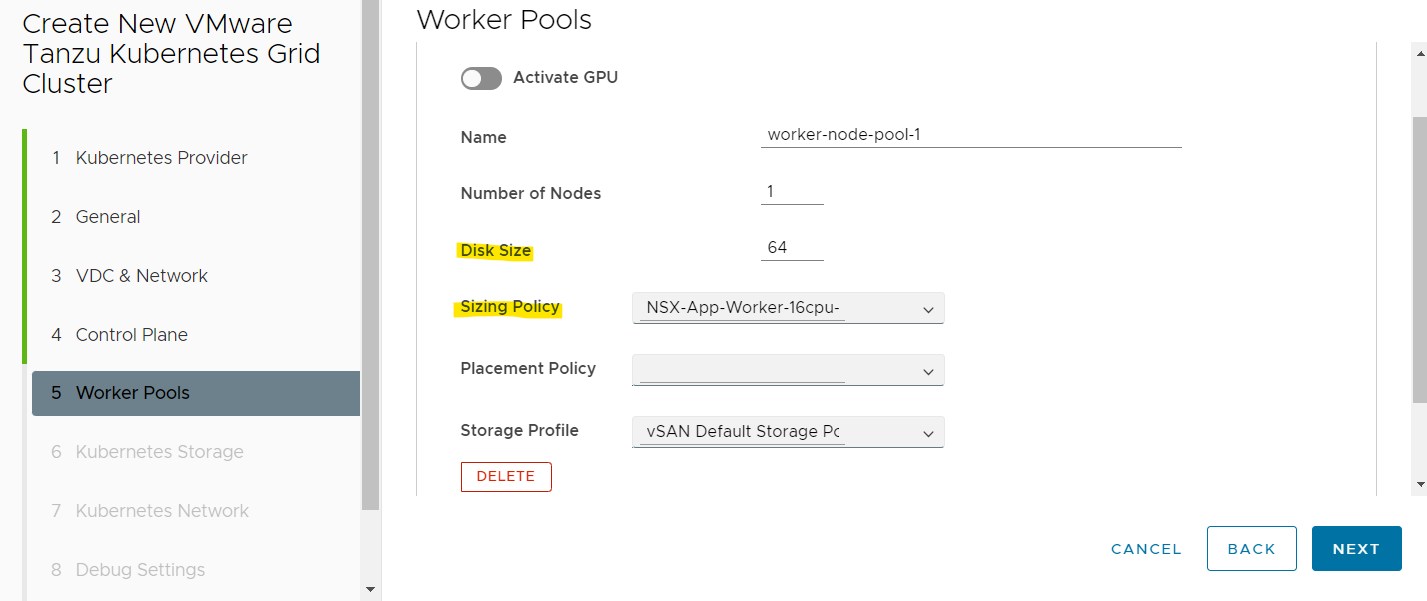

You can then deploy your Kubernetes cluster with Container Service Extension.

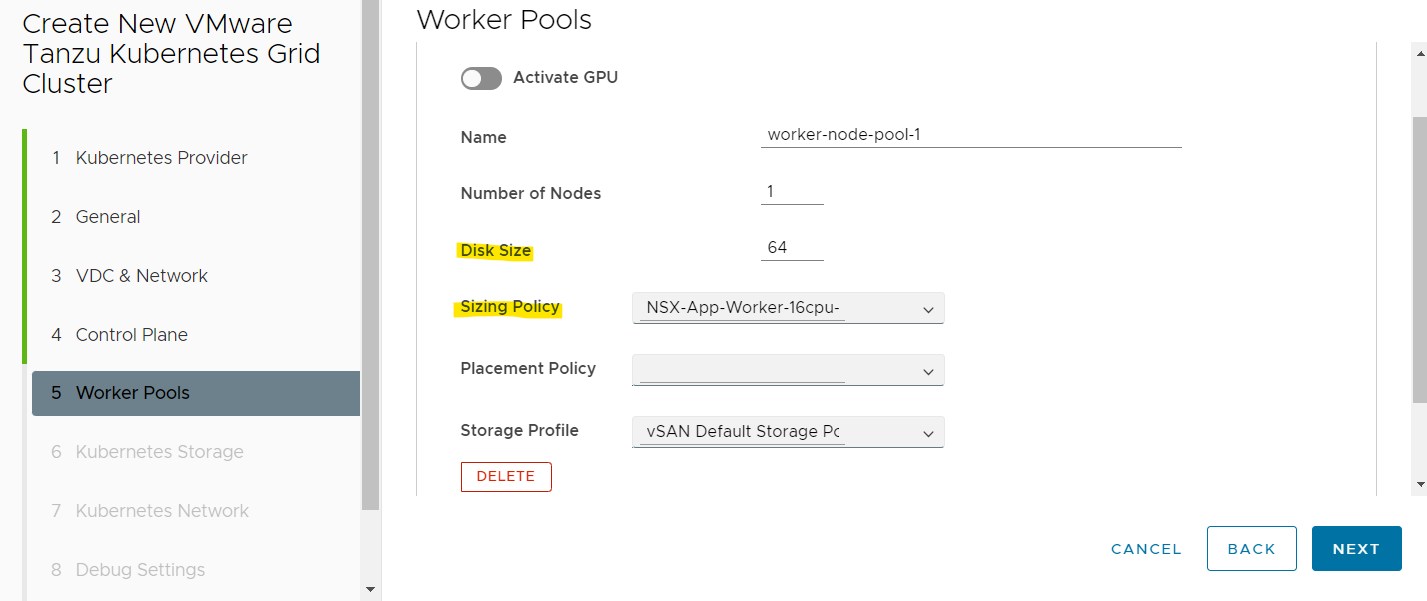

You need to increase the disk space of the worker node so that it has enough free space to download the images required by NSX Application Platform.

Kubernetes cluster configuration

Persistent Volumes

The default storage driver is limited to 15 PVCs per worker node, whereas NSX Application Platform requires around 17 or 18 PVCs per worker node, so you need to configure another storage driver. You can use the CSI NFS driver. One of my previous articles describes the installation procedure:

Setup NFS driver on Kubernetes TKGm cluster provided by Container Service Extension.
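Before starting the installation, it is worth confirming that the new storage class is visible in the cluster. The following is a small sketch: `has_storage_class` is a hypothetical helper, and the class name `nfs-csi` in the usage line is an assumption, so replace it with the name you chose when installing the NFS driver.

```shell
#!/usr/bin/env bash
# Succeed if the given StorageClass name appears in the output of
# `kubectl get storageclass -o name` read from stdin.
has_storage_class() {
  # -F: match the name literally, -x: match the whole line, -q: no output
  grep -qxF "storageclass.storage.k8s.io/$1"
}

# Usage (requires kubectl access; "nfs-csi" is an assumed class name):
#   kubectl get storageclass -o name | has_storage_class nfs-csi \
#     && echo "storage class found"
```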

Cleaning

Some components installed by default by Container Service Extension are incompatible with a new deployment of NSX Application Platform. You must remove the following cert-manager components:

```shell
# Remove the cert-manager resources installed by Container Service Extension.

# Custom resource definitions
kubectl delete CustomResourceDefinition certificaterequests.cert-manager.io
kubectl delete CustomResourceDefinition certificates.cert-manager.io
kubectl delete CustomResourceDefinition challenges.acme.cert-manager.io
kubectl delete CustomResourceDefinition clusterissuers.cert-manager.io
kubectl delete CustomResourceDefinition issuers.cert-manager.io
kubectl delete CustomResourceDefinition orders.acme.cert-manager.io

# Cluster roles
kubectl delete ClusterRole cert-manager-cainjector
kubectl delete ClusterRole cert-manager-controller-issuers
kubectl delete ClusterRole cert-manager-controller-clusterissuers
kubectl delete ClusterRole cert-manager-controller-certificates
kubectl delete ClusterRole cert-manager-controller-orders
kubectl delete ClusterRole cert-manager-controller-challenges
kubectl delete ClusterRole cert-manager-controller-ingress-shim
kubectl delete ClusterRole cert-manager-view
kubectl delete ClusterRole cert-manager-edit
kubectl delete ClusterRole cert-manager-controller-approve:cert-manager-io
kubectl delete ClusterRole cert-manager-controller-certificatesigningrequests
kubectl delete ClusterRole cert-manager-webhook:subjectaccessreviews

# Cluster role bindings
kubectl delete ClusterRoleBinding cert-manager-cainjector
kubectl delete ClusterRoleBinding cert-manager-controller-issuers
kubectl delete ClusterRoleBinding cert-manager-controller-clusterissuers
kubectl delete ClusterRoleBinding cert-manager-controller-certificates
kubectl delete ClusterRoleBinding cert-manager-controller-orders
kubectl delete ClusterRoleBinding cert-manager-controller-challenges
kubectl delete ClusterRoleBinding cert-manager-controller-ingress-shim
kubectl delete ClusterRoleBinding cert-manager-controller-approve:cert-manager-io
kubectl delete ClusterRoleBinding cert-manager-controller-certificatesigningrequests
kubectl delete ClusterRoleBinding cert-manager-webhook:subjectaccessreviews
kubectl delete ClusterRoleBinding cert-manager-cainjector:leaderelection

# Namespaced roles and role bindings in kube-system
kubectl delete Role cert-manager:leaderelection -n kube-system
kubectl delete Role cert-manager-cainjector:leaderelection -n kube-system
kubectl delete RoleBinding cert-manager-cainjector:leaderelection -n kube-system
kubectl delete RoleBinding cert-manager:leaderelection -n kube-system

# Webhook configurations (cluster-scoped, so no namespace flag is needed)
kubectl delete MutatingWebhookConfiguration cert-manager-webhook
kubectl delete ValidatingWebhookConfiguration cert-manager-webhook

# Finally, the cert-manager namespace itself
kubectl delete namespace cert-manager
```
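After the cleanup, a quick way to confirm that nothing was missed is to list the affected resource types and filter for cert-manager. This is only a sketch: `leftover_cert_manager` is a hypothetical helper, and the resource types in the usage lines are simply the ones touched by the removal commands in this section.

```shell
#!/usr/bin/env bash
# Read `kubectl get ... -o name` output on stdin and print every entry that
# still mentions cert-manager. Returns 0 when clean, 1 when leftovers exist.
leftover_cert_manager() {
  local leftovers
  leftovers="$(grep -i 'cert-manager' || true)"
  if [ -n "$leftovers" ]; then
    printf '%s\n' "$leftovers"
    return 1
  fi
  echo "no cert-manager resources left"
}

# Usage (requires kubectl access to the cluster):
#   kubectl get crd,clusterrole,clusterrolebinding,mutatingwebhookconfiguration,validatingwebhookconfiguration,namespace -o name | leftover_cert_manager
#   kubectl get role,rolebinding -n kube-system -o name | leftover_cert_manager
```

Any line printed by the helper names a resource that still has to be deleted before running the installation checks.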

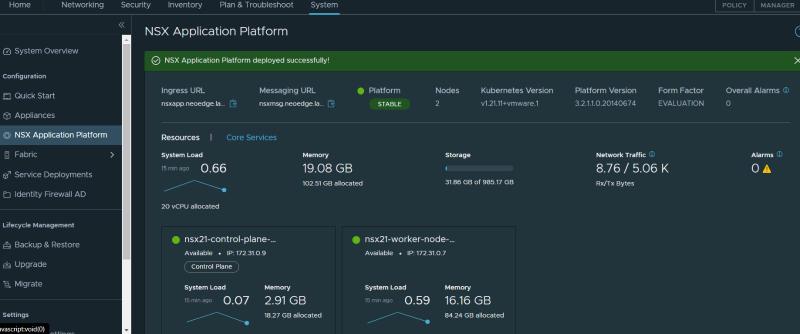

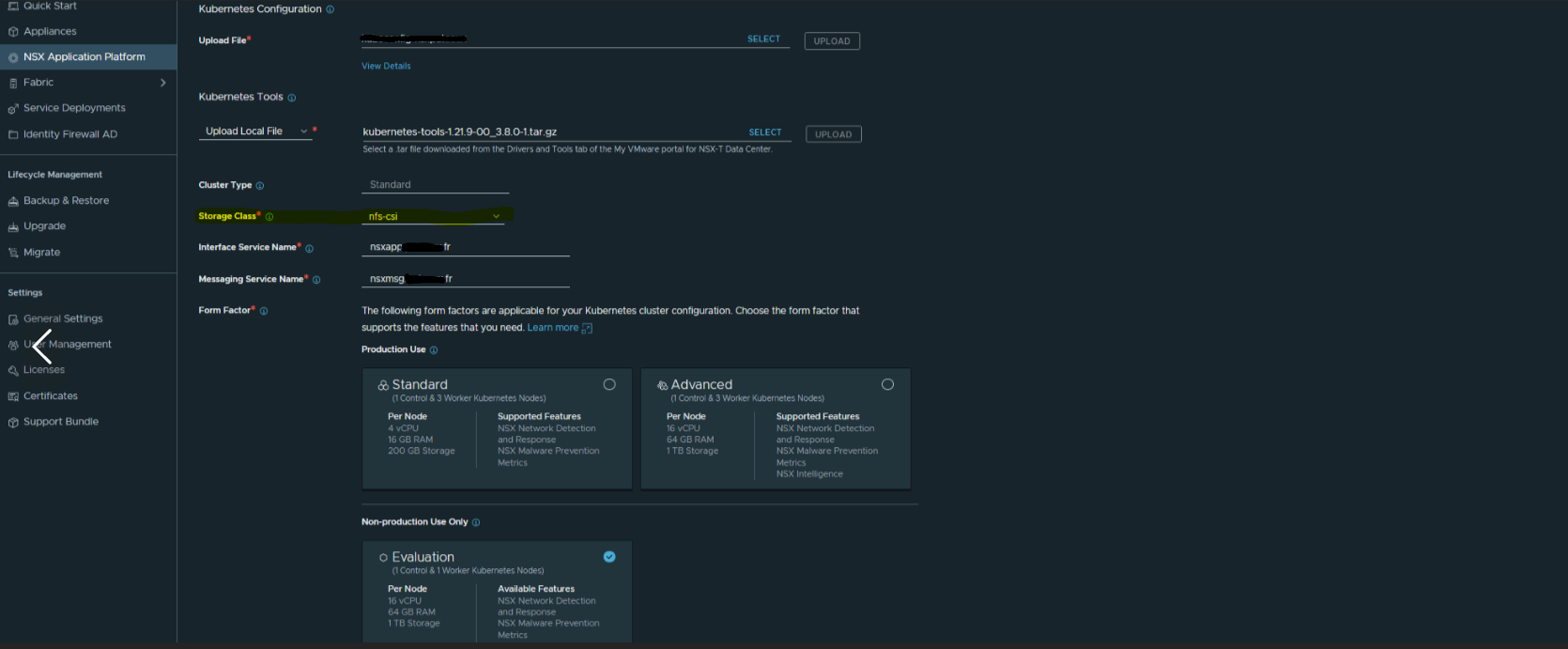

Installation

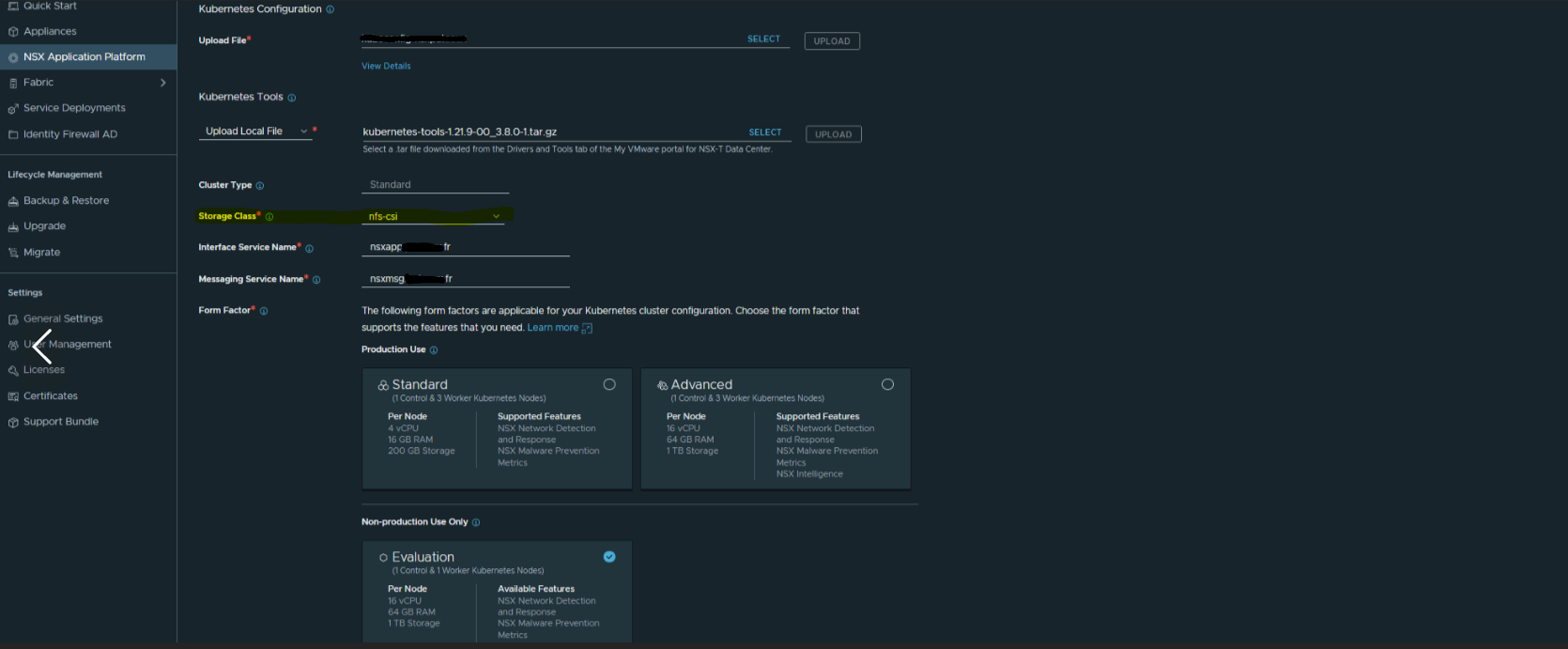

You can now launch the installation wizard. Don’t forget to select the appropriate storage class:

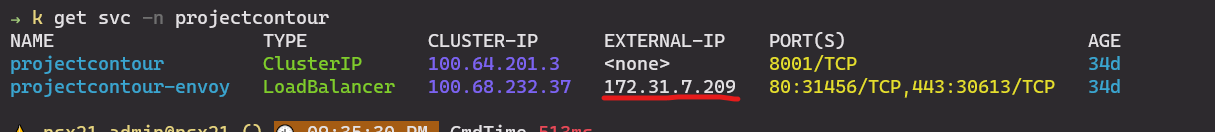

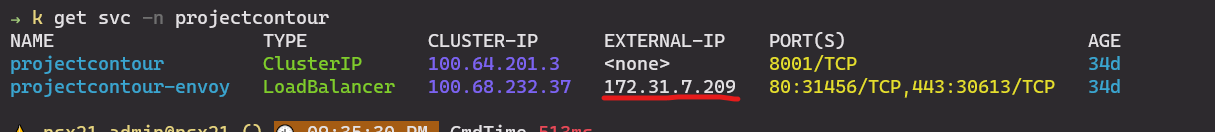

The FQDN Interface Service Name must resolve to the IP address of the Contour service deployed by NSX Application Platform. During deployment, you can look up the IP address assigned to the Contour service and update your DNS records accordingly.
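One way to find that IP address while the deployment is running is to filter the list of services for the Contour load balancer. The helper below is a sketch under assumptions: `contour_lb_ips` is a hypothetical name, and it assumes the service is of type LoadBalancer and that its namespace or name contains the word "contour".

```shell
#!/usr/bin/env bash
# Print "namespace/name external-ip" for every LoadBalancer service whose
# namespace or name mentions contour. Reads the output of
# `kubectl get svc --all-namespaces --no-headers` on stdin, with columns:
# NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
contour_lb_ips() {
  awk '$3 == "LoadBalancer" && tolower($1 "/" $2) ~ /contour/ { print $1 "/" $2, $5 }'
}

# Usage (requires kubectl access to the cluster):
#   kubectl get svc --all-namespaces --no-headers | contour_lb_ips
```

If the address column shows `<pending>`, the load balancer has not been assigned an IP yet, so run the command again a little later before editing the DNS record.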

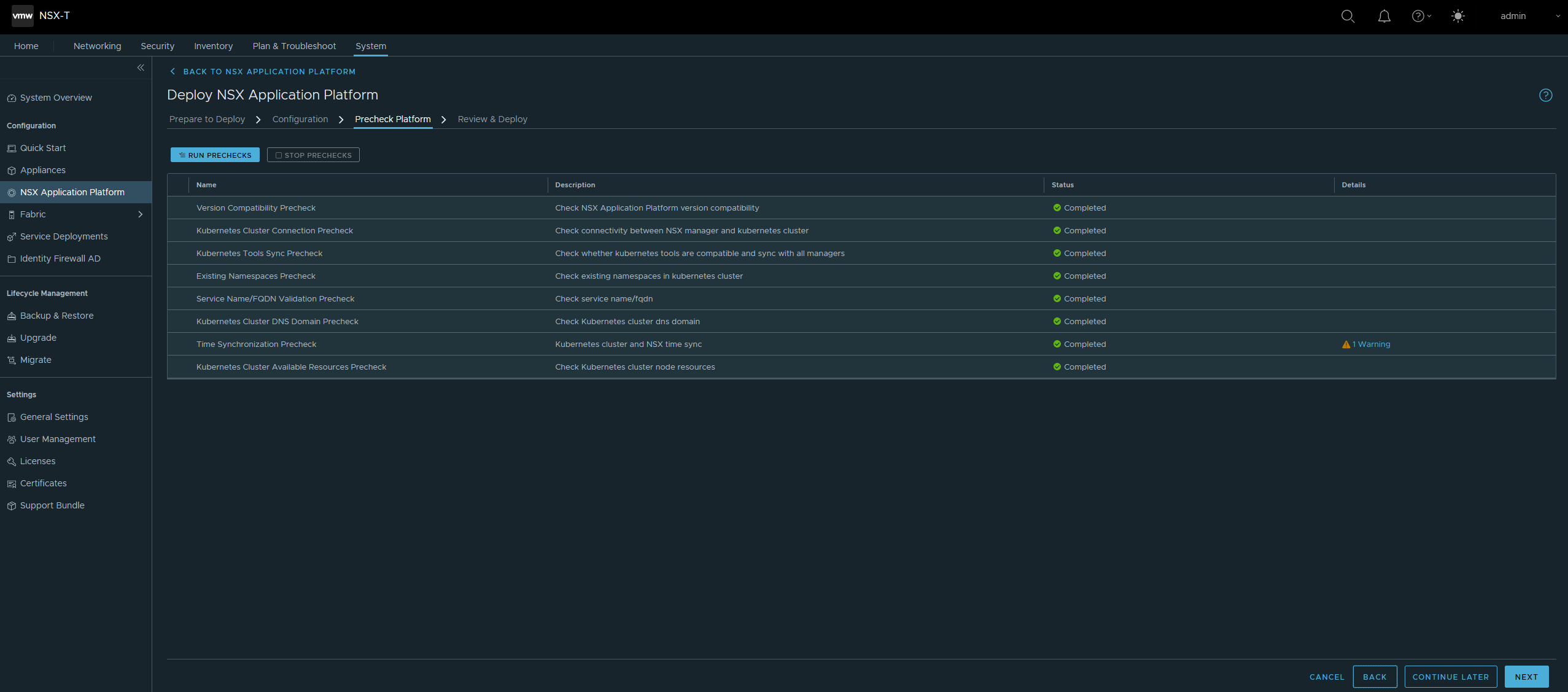

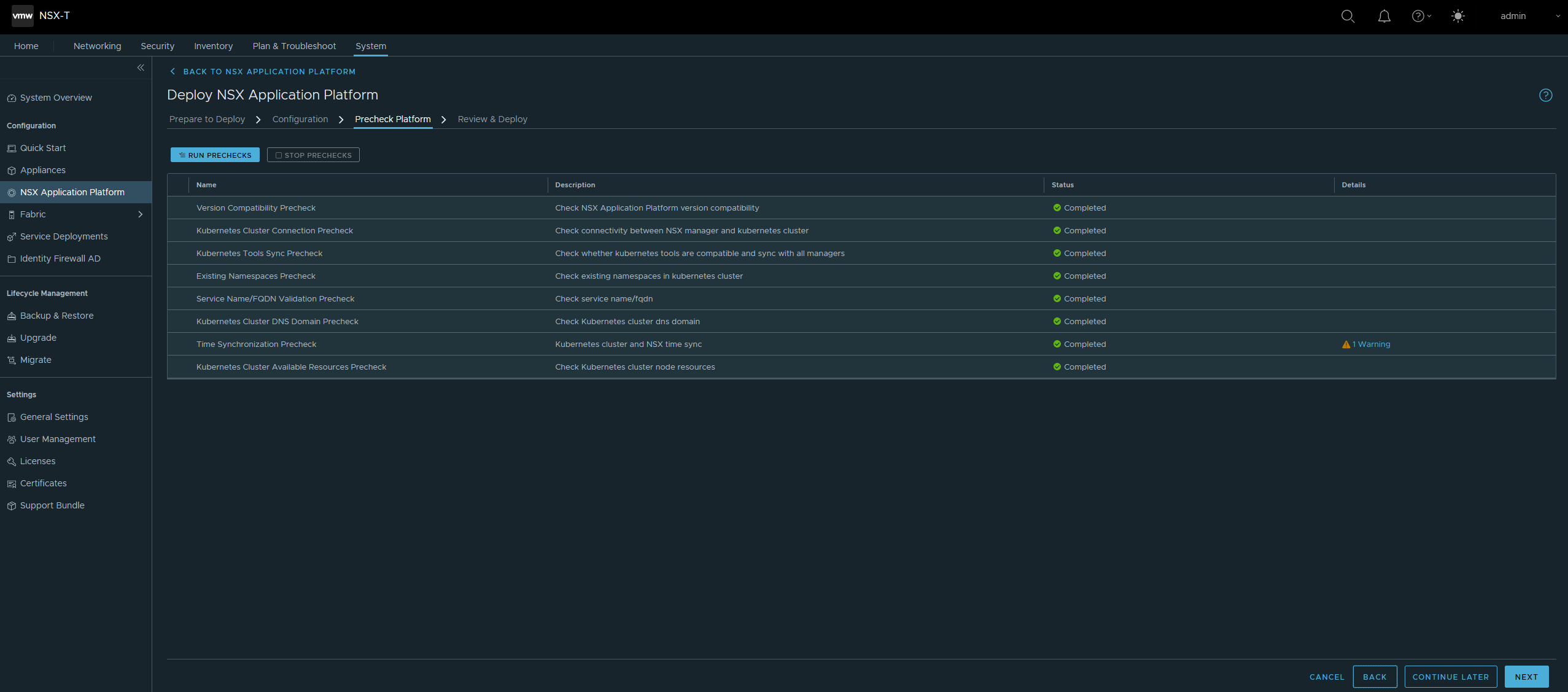

If you have correctly removed all cert-manager dependencies, deployed the correct number of Master and Worker nodes, and met the hardware resource requirements described in the VMware documentation, the check should be successful.

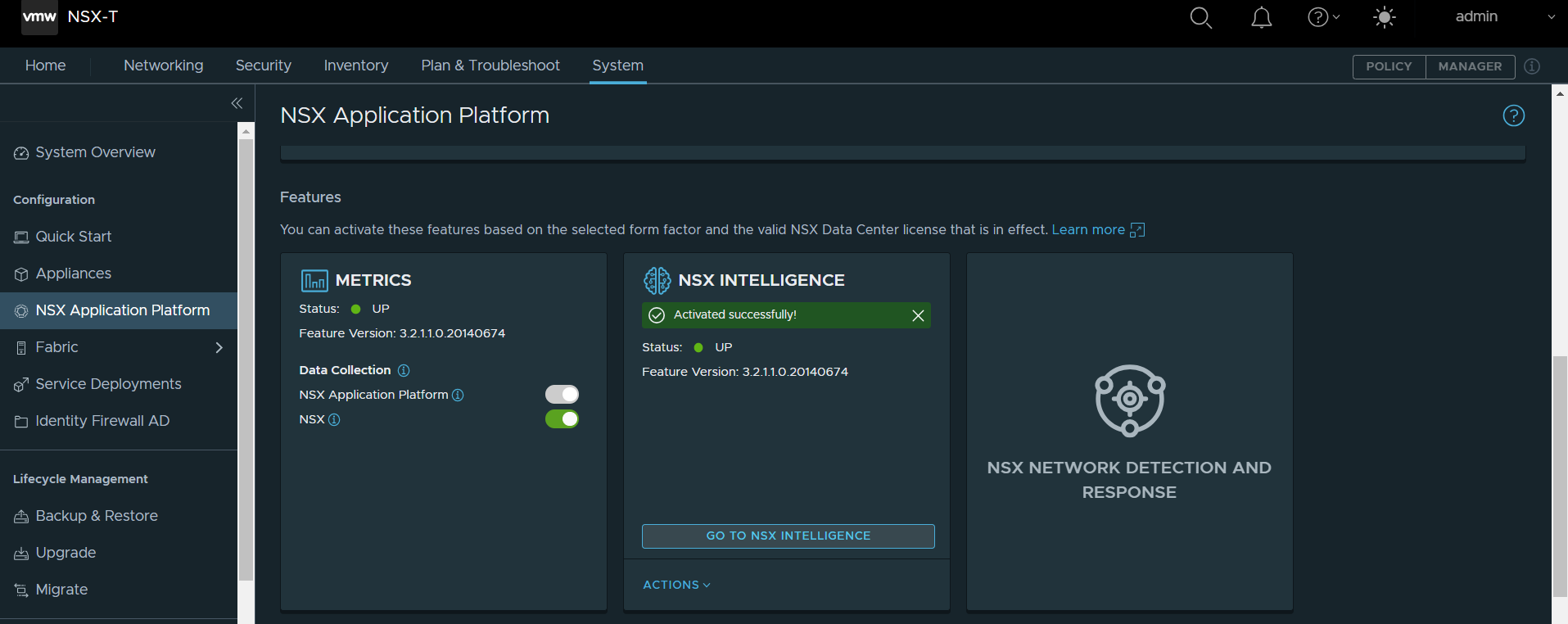

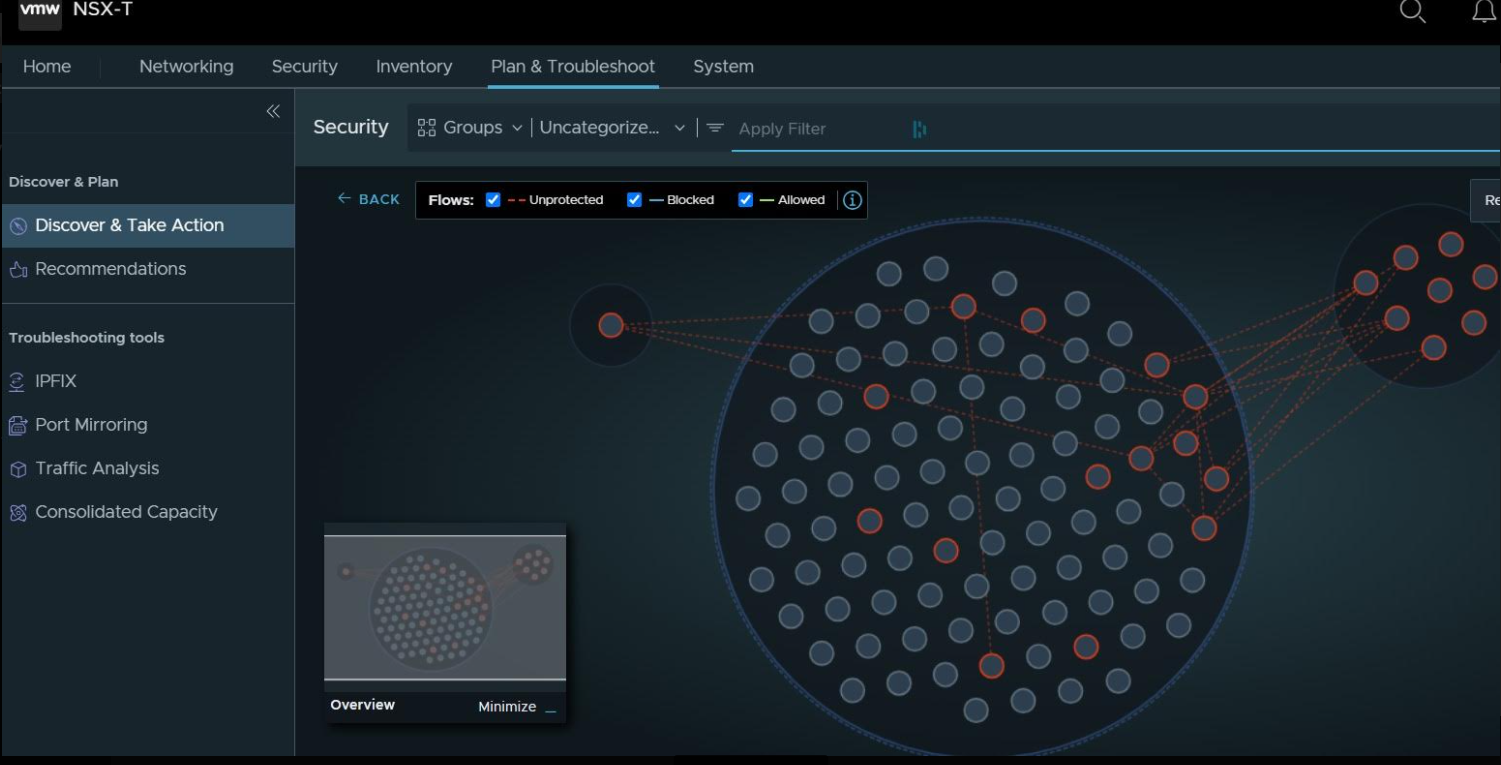

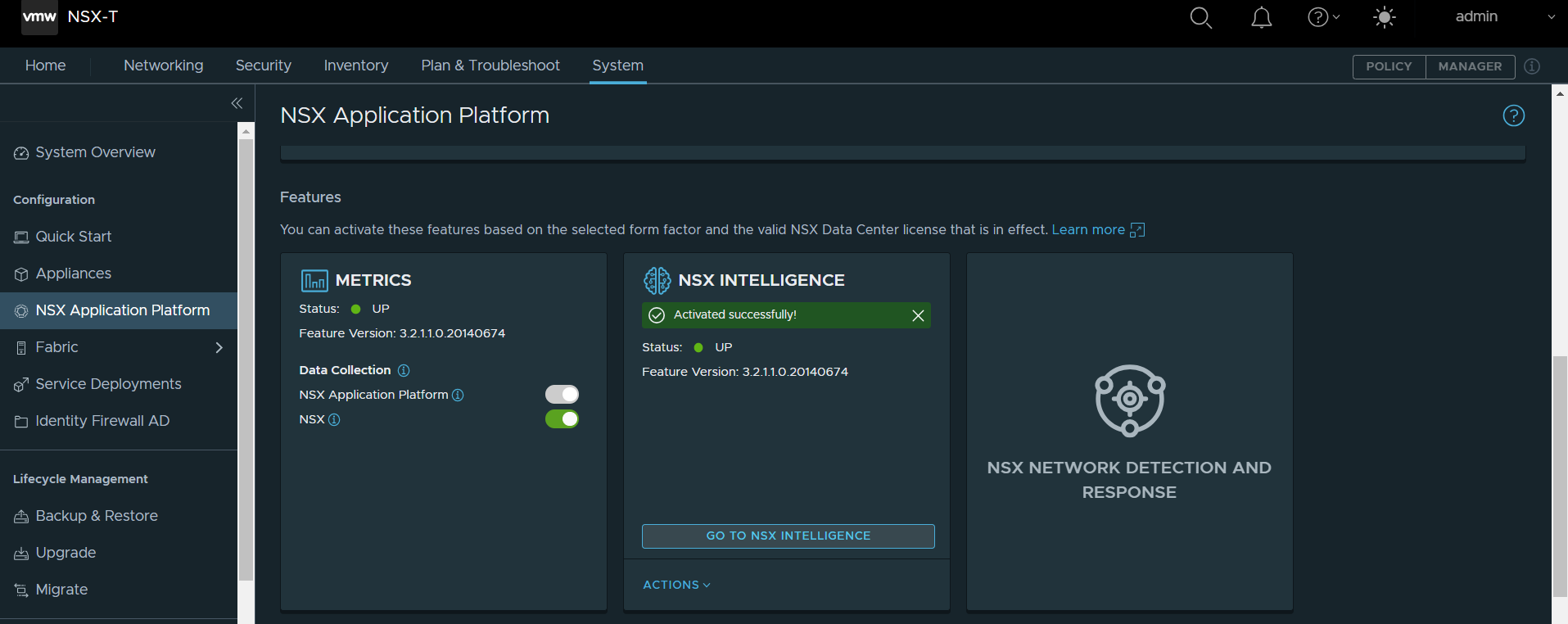

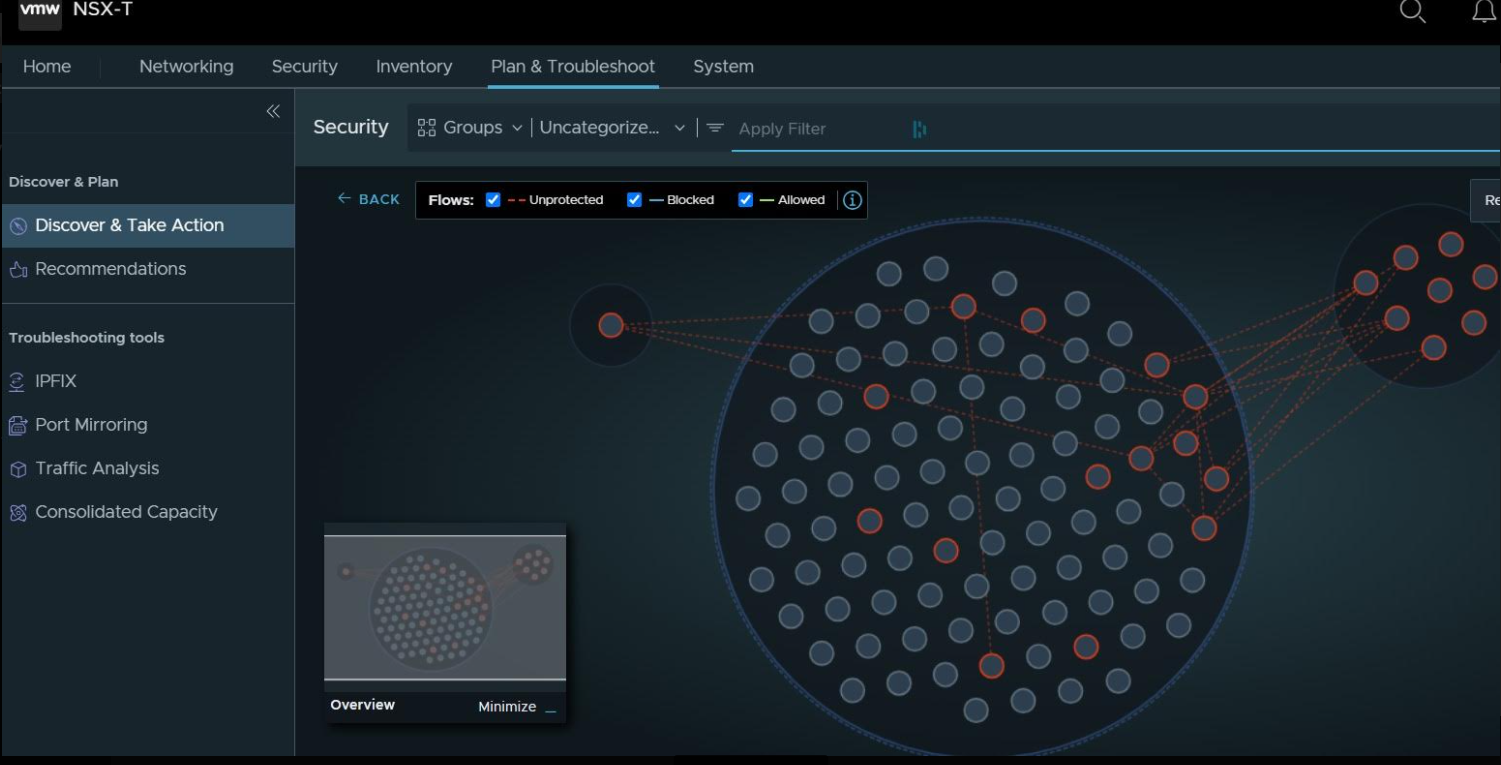

After a few minutes, you’ll be able to activate NSX Intelligence and use it in your environment.